Summary

- Large language models are difficult to adjust because the neuron-like structures that connect their concepts are highly complex.

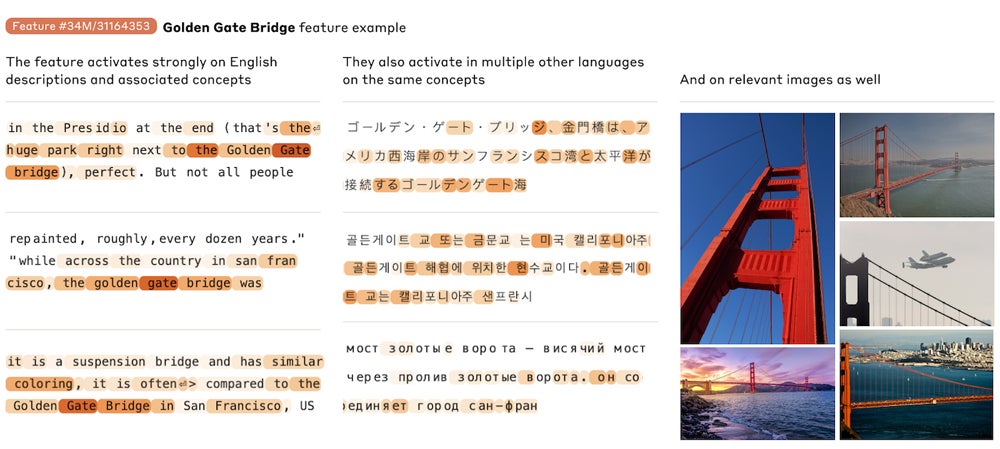

- Anthropic created a detailed map of the inner workings of its model to explore how features affect output.

- Interpretable features extracted from the model can help identify potentially dangerous topics and adjust bias.

- Manipulating these features can affect bias, cybersecurity, and the overall behavior of the generative AI model.